Latest! A log of installing fairseq on Win11 (so many pitfalls!!!)


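I still wanted to reproduce the experiments from the original Transformer paper, so I searched around for that and found some tips (very useful!).

I found that the fairseq library looks really handy and ships pretrained models, so I happily followed the official tutorial to give it a try. And then I spent the whole afternoon configuring the environment and hitting errors~ yay yay yay~ (I've lost it, just leave me be~)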

fairseq/examples/translation at main · facebookresearch/fairseq

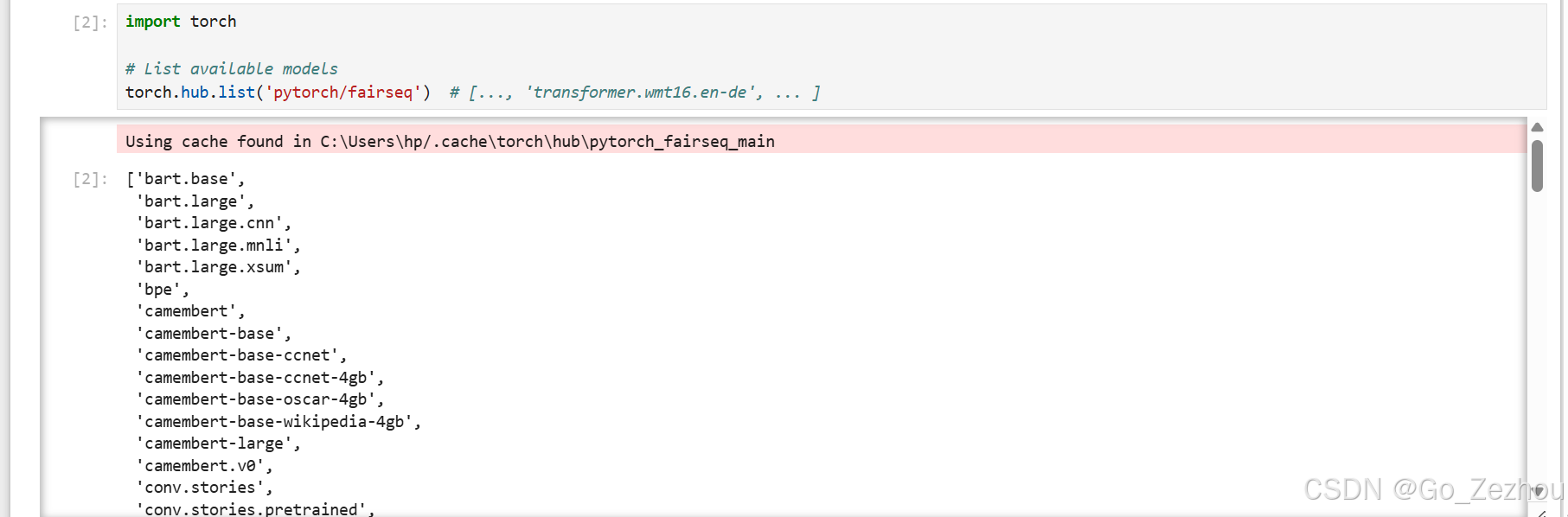

Code:

    import torch

    # List available models
    torch.hub.list('pytorch/fairseq')  # [..., 'transformer.wmt16.en-de', ... ]

First error:

    RuntimeError Traceback (most recent call last)
    Cell In[2], line 4
    1 import torch
    3 # List available models
    ----> 4 torch.hub.list('pytorch/fairseq')

    File E:\miniconda\Lib\site-packages\torch\hub.py:430, in list(github, force_reload, skip_validation, trust_repo, verbose)
    428 with _add_to_sys_path(repo_dir):
    429 hubconf_path = os.path.join(repo_dir, MODULE_HUBCONF)
    --> 430 hub_module = _import_module(MODULE_HUBCONF, hubconf_path)
    432 # We take functions starts with '_' as internal helper functions
    433 entrypoints = [f for f in dir(hub_module) if callable(getattr(hub_module, f)) and not f.startswith('_')]

    File E:\miniconda\Lib\site-packages\torch\hub.py:106, in _import_module(name, path)
    104 module = importlib.util.module_from_spec(spec)
    105 assert isinstance(spec.loader, Loader)
    --> 106 spec.loader.exec_module(module)
    107 return module

    File <frozen importlib._bootstrap_external>:940, in exec_module(self, module)

    File <frozen importlib._bootstrap>:241, in _call_with_frames_removed(f, *args, **kwds)

    File ~/.cache\torch\hub\pytorch_fairseq_main\hubconf.py:35
    33 missing_deps.append(dep)
    34 if len(missing_deps) > 0:
    ---> 35 raise RuntimeError("Missing dependencies: {}".format(", ".join(missing_deps)))
    38 # only do fairseq imports after checking for dependencies
    39 from fairseq.hub_utils import ( # noqa; noqa
    40 BPEHubInterface as bpe,
    41 TokenizerHubInterface as tokenizer,
    42 )

    RuntimeError: Missing dependencies: hydra-core, omegaconf

OK, fine, I'll install these two libraries (huge pitfall!! It is nowhere near that simple)
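The `Missing dependencies` message is raised before fairseq itself is imported, so it can help to see what is missing up front. Here is a minimal sketch using only the standard library; it is not fairseq's own check. `find_missing` is a hypothetical helper name, and it assumes the pip packages `hydra-core` and `omegaconf` are imported as `hydra` and `omegaconf`.

```python
import importlib.util


def find_missing(modules):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Assumed import names: hydra-core installs `hydra`, omegaconf installs `omegaconf`.
    print(find_missing(["hydra", "omegaconf"]))
```

An empty list means both modules are visible to the interpreter that ran the snippet. It says nothing about whether their versions are compatible with fairseq.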

Second error:

    Using cache found in C:\Users\hp/.cache\torch\hub\pytorch_fairseq_main
    ---------------------------------------------------------------------------
    ValueError Traceback (most recent call last)
    Cell In[4], line 4
    1 import torch
    3 # List available models
    ----> 4 torch.hub.list('pytorch/fairseq')

    File E:\miniconda\Lib\site-packages\torch\hub.py:430, in list(github, force_reload, skip_validation, trust_repo, verbose)
    428 with _add_to_sys_path(repo_dir):
    429 hubconf_path = os.path.join(repo_dir, MODULE_HUBCONF)
    --> 430 hub_module = _import_module(MODULE_HUBCONF, hubconf_path)
    432 # We take functions starts with '_' as internal helper functions
    433 entrypoints = [f for f in dir(hub_module) if callable(getattr(hub_module, f)) and not f.startswith('_')]

    File E:\miniconda\Lib\site-packages\torch\hub.py:106, in _import_module(name, path)
    104 module = importlib.util.module_from_spec(spec)
    105 assert isinstance(spec.loader, Loader)
    --> 106 spec.loader.exec_module(module)
    107 return module

    File <frozen importlib._bootstrap_external>:940, in exec_module(self, module)

    File <frozen importlib._bootstrap>:241, in _call_with_frames_removed(f, *args, **kwds)

    File ~/.cache\torch\hub\pytorch_fairseq_main\hubconf.py:39
    35 raise RuntimeError("Missing dependencies: {}".format(", ".join(missing_deps)))
    38 # only do fairseq imports after checking for dependencies
    ---> 39 from fairseq.hub_utils import ( # noqa; noqa
    40 BPEHubInterface as bpe,
    41 TokenizerHubInterface as tokenizer,
    42 )
    43 from fairseq.models import MODEL_REGISTRY # noqa
    46 # torch.hub doesn't build Cython components, so if they are not found then try
    47 # to build them here

    File ~/.cache\torch\hub\pytorch_fairseq_main\fairseq\__init__.py:20
    17 __all__ = ["pdb"]
    19 # backwards compatibility to support `from fairseq.X import Y`
    ---> 20 from fairseq.distributed import utils as distributed_utils
    21 from fairseq.logging import meters, metrics, progress_bar # noqa
    23 sys.modules["fairseq.distributed_utils"] = distributed_utils

    File ~/.cache\torch\hub\pytorch_fairseq_main\fairseq\distributed\__init__.py:7
    1 # Copyright (c) Facebook, Inc. and its affiliates.
    2 #
    3 # This source code is licensed under the MIT license found in the
    4 # LICENSE file in the root directory of this source tree.
    6 from .distributed_timeout_wrapper import DistributedTimeoutWrapper
    ----> 7 from .fully_sharded_data_parallel import (
    8 fsdp_enable_wrap,
    9 fsdp_wrap,
    10 FullyShardedDataParallel,
    11 )
    12 from .legacy_distributed_data_parallel import LegacyDistributedDataParallel
    13 from .module_proxy_wrapper import ModuleProxyWrapper

    File ~/.cache\torch\hub\pytorch_fairseq_main\fairseq\distributed\fully_sharded_data_parallel.py:10
    7 from typing import Optional
    9 import torch
    ---> 10 from fairseq.dataclass.configs import DistributedTrainingConfig
    11 from fairseq.distributed import utils as dist_utils
    14 try:

    File ~/.cache\torch\hub\pytorch_fairseq_main\fairseq\dataclass\__init__.py:6
    1 # Copyright (c) Facebook, Inc. and its affiliates.
    2 #
    3 # This source code is licensed under the MIT license found in the
    4 # LICENSE file in the root directory of this source tree.
    ----> 6 from .configs import FairseqDataclass
    7 from .constants import ChoiceEnum
    10 __all__ = [
    11 "FairseqDataclass",
    12 "ChoiceEnum",
    13 ]

    File ~/.cache\torch\hub\pytorch_fairseq_main\fairseq\dataclass\configs.py:1127
    1118 ema_update_freq: int = field(
    1119 default=1, metadata={"help": "Do EMA update every this many model updates"}
    1120 )
    1121 ema_fp32: bool = field(
    1122 default=False,
    1123 metadata={"help": "If true, store EMA model in fp32 even if model is in fp16"},
    1124 )
    -> 1127 @dataclass
    1128 class FairseqConfig(FairseqDataclass):
    1129 common: CommonConfig = CommonConfig()
    1130 common_eval: CommonEvalConfig = CommonEvalConfig()

    File E:\miniconda\Lib\dataclasses.py:1230, in dataclass(cls, init, repr, eq, order, unsafe_hash, frozen, match_args, kw_only, slots, weakref_slot)
    1227 return wrap
    1229 # We're called as @dataclass without parens.
    -> 1230 return wrap(cls)

    File E:\miniconda\Lib\dataclasses.py:1220, in dataclass.<locals>.wrap(cls)
    1219 def wrap(cls):
    -> 1220 return _process_class(cls, init, repr, eq, order, unsafe_hash,
    1221 frozen, match_args, kw_only, slots,
    1222 weakref_slot)

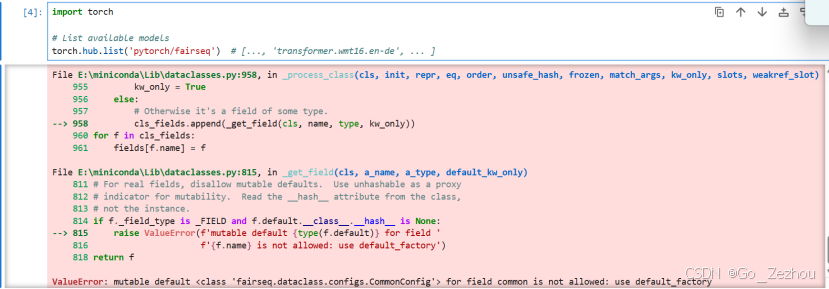

    File E:\miniconda\Lib\dataclasses.py:958, in _process_class(cls, init, repr, eq, order, unsafe_hash, frozen, match_args, kw_only, slots, weakref_slot)
    955 kw_only = True
    956 else:
    957 # Otherwise it's a field of some type.
    --> 958 cls_fields.append(_get_field(cls, name, type, kw_only))
    960 for f in cls_fields:
    961 fields[f.name] = f

    File E:\miniconda\Lib\dataclasses.py:815, in _get_field(cls, a_name, a_type, default_kw_only)
    811 # For real fields, disallow mutable defaults. Use unhashable as a proxy
    812 # indicator for mutability. Read the __hash__ attribute from the class,
    813 # not the instance.
    814 if f._field_type is _FIELD and f.default.__class__.__hash__ is None:
    --> 815 raise ValueError(f'mutable default {type(f.default)} for field '
    816 f'{f.name} is not allowed: use default_factory')
    818 return f

    ValueError: mutable default <class 'fairseq.dataclass.configs.CommonConfig'> for field common is not allowed: use default_factory

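This happens because fairseq has not been updated in a long time. The traceback shows dataclasses rejecting a field whose default value is a CommonConfig instance. Starting with Python 3.11, dataclasses refuses any unhashable default value (earlier versions only rejected list, dict, and set), so this kind of default no longer works on newer Python versions.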
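To make the error concrete, here is a small standalone sketch. `CommonConfig` and the two wrapper classes are toy stand-ins, not fairseq's real definitions. On Python 3.11+ the first pattern raises the same kind of `ValueError`, while `field(default_factory=...)`, the fix the message itself suggests, works on every version.

```python
import sys
from dataclasses import dataclass, field


@dataclass
class CommonConfig:  # toy stand-in, not fairseq's real class
    seed: int = 1


def define_with_instance_default():
    """Declare a dataclass whose field default is a dataclass instance."""
    @dataclass
    class BrokenConfig:
        # A plain @dataclass instance is unhashable, so 3.11+ rejects it here.
        common: CommonConfig = CommonConfig()
    return BrokenConfig


@dataclass
class FixedConfig:
    # default_factory builds a fresh CommonConfig for every FixedConfig.
    common: CommonConfig = field(default_factory=CommonConfig)


if __name__ == "__main__":
    try:
        define_with_instance_default()
        print("instance default accepted on", sys.version_info[:2])
    except ValueError as err:
        print("rejected:", err)
    print(FixedConfig())
```

The patched forks mentioned further down presumably apply a change along these lines, though I have not verified what each one does.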

Installing fairseq on Python 3.9 and later - 酱_油 - 博客园 (cnblogs)

I created a virtual environment to see whether it would work this time, but.....

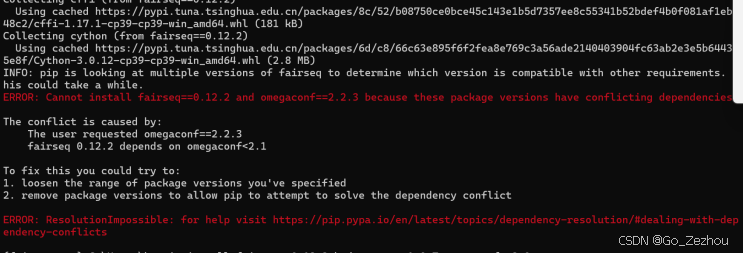

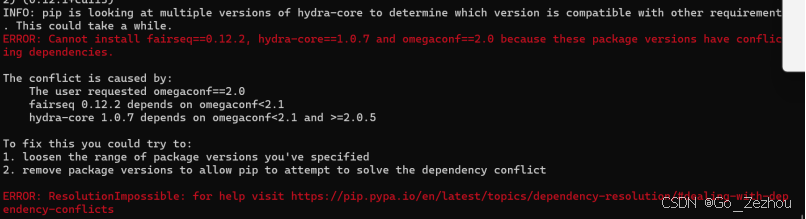

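Errors 3 through N

All of them were package incompatibility problems. Python packages depend on each other very heavily; this was the first time I really felt it, haha.

Also, do not install Python 3.11. (Me from a year ago, are you listening?!!!!) On versions 3.8-3.10 it should not fail (I'm falling apart..)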
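A quick guard for this, as a sketch: the 3.8-3.10 range is just what this post found to work, not an official fairseq support statement, and `in_tested_range` is a hypothetical helper name.

```python
import sys


def in_tested_range(version=None, low=(3, 8), high=(3, 10)):
    """True if the (major, minor) version lies within [low, high], inclusive."""
    major_minor = tuple((version or sys.version_info)[:2])
    return low <= major_minor <= high


if __name__ == "__main__":
    if not in_tested_range():
        print("Python", sys.version_info[:2], "is outside the 3.8-3.10 range used in this post")
```

Running it inside the new virtual environment before installing anything shows right away whether the interpreter is one of the versions that worked here.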

There are also some answers on GitHub. I tried them and it still did not work.

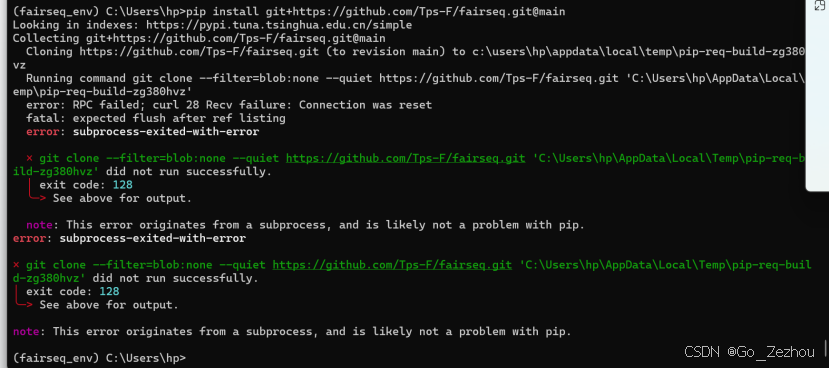

    pip uninstall fairseq
    pip install git+https://github.com/Tps-F/fairseq.git@main

Error N+1:

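This one seems to be caused by the pip + git install method being unreliable. I felt this approach should still work, so I also consulted: Fixing a failed pip install git+https:xxxx (pip install git+ errors) - CSDN blog

This is the repository I git cloned; the fairseq in it should already be patched: One-sixth/fairseq: Facebook AI Research Sequence-to-Sequence Toolkit written in Python.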

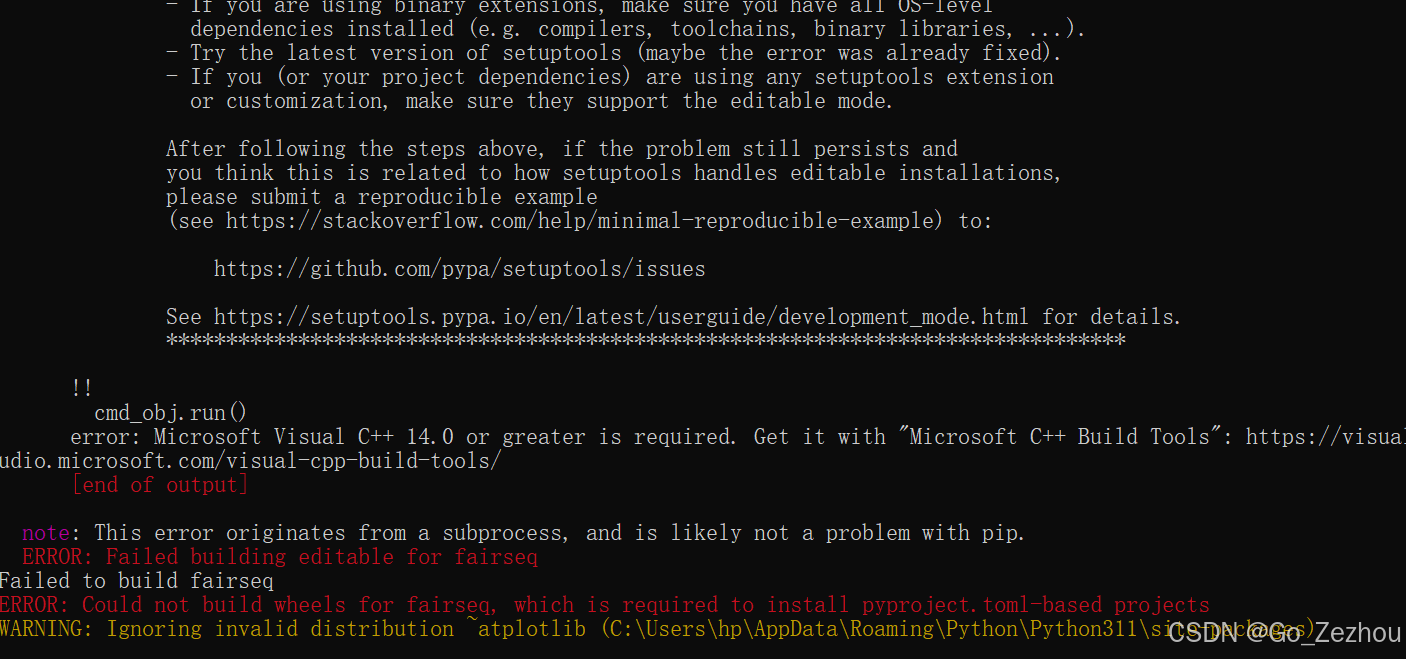

When I ran the following command, I hit another error saying that VC (Visual C++) was not installed:

pip install --editable ./

Error N+2:

Installing Microsoft Visual C++ Build Tools - CSDN blog

Installed everything again, haha (wry smile)

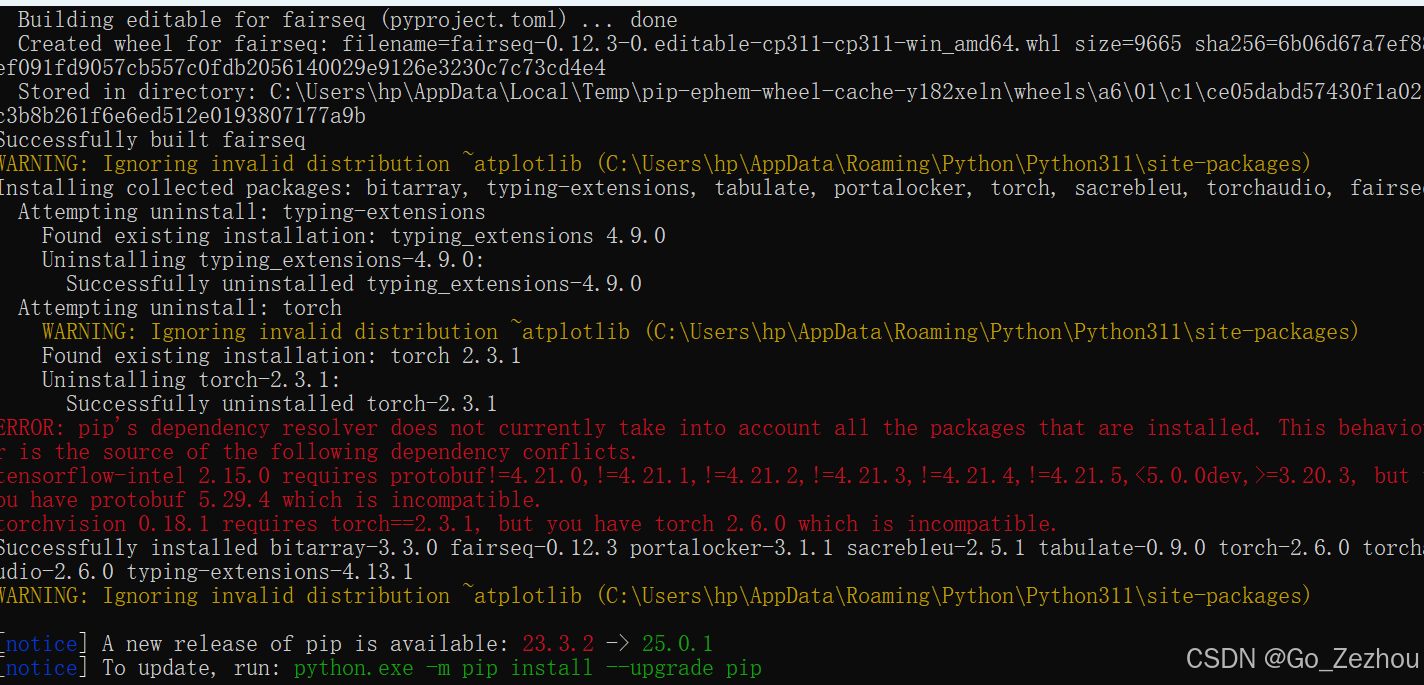

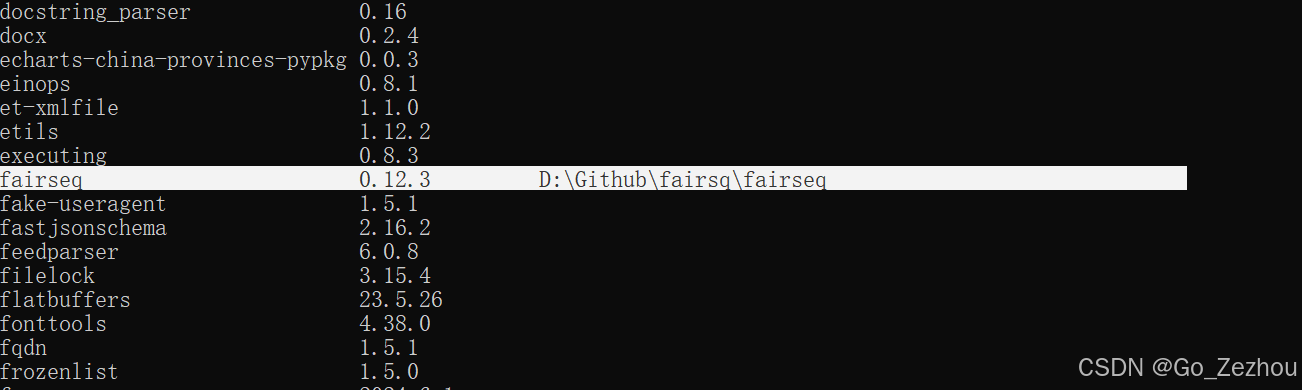

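Aaaaaaaaaaah, did it actually work??!!!!!

It's there!! Let me go try the code again~

Either way, after an afternoon of struggling it should finally be installed!! I leaned on a lot of other people's experience and posts!!! Thank you!!!!

Edit 2, 18:36

There is still one last step, aaaaaah: configuring the environment variables

Installing fairseq in anaconda and using it in Jupyter notebook (miniconda install fairseq) - CSDN blog

Please read that post! It is the very last step, aaaah, so grateful!!!!

It now runs in Jupyter notebook, waaaaah, I'm so happy!!!!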
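A sanity check that may help at this stage: a package can install fine and still fail to import in Jupyter if the notebook kernel runs a different interpreter than the environment it was installed into. The sketch below is only a diagnostic to run in a notebook cell, not the environment-variable step from the linked post, and `kernel_report` is a hypothetical helper name.

```python
import importlib.util
import sys


def kernel_report(package="fairseq"):
    """Report which interpreter is running and whether `package` is visible to it."""
    spec = importlib.util.find_spec(package)
    return {
        "python": sys.executable,                   # interpreter behind this kernel
        "found": spec is not None,                  # can the package be located?
        "location": getattr(spec, "origin", None),  # where it would be loaded from
    }


if __name__ == "__main__":
    print(kernel_report())
```

If `python` points somewhere other than the virtual environment, or `found` is `False`, the kernel is most likely not using the environment where fairseq was installed.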
