ResNet: Model Architecture and Code

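🌟 Introduction to ResNet

ResNet (Residual Network) was proposed by Kaiming He et al. in 2015 and won that year's ImageNet challenge (ILSVRC 2015). Its main innovation is residual learning: residual blocks with shortcut connections address the degradation problem of deep networks and ease the vanishing-gradient problem.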

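🔑 Why do we need ResNet?

- Degradation in deep networks: as a network gets deeper, the training error does not necessarily decrease and may even rise.
- Vanishing/exploding gradients: in deep networks, gradients can vanish or explode, which makes training difficult.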

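In a traditional network, each stack of layers directly learns the desired mapping:

$$H(x)$$

ResNet instead has the layers learn the residual:

$$F(x) = H(x) - x$$

That is:

$$H(x) = F(x) + x$$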

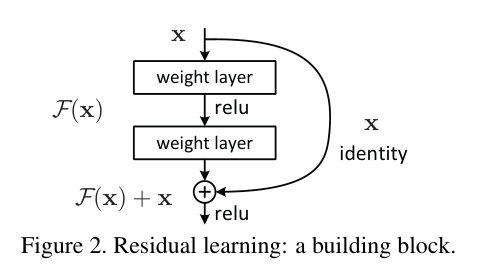
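One way to see why the shortcut helps gradients is sketched below. It assumes a simplified block that computes y = x + F(x) with no activation after the addition (the blocks in this post apply a ReLU after the sum, so this is an approximation), and it writes the training loss as the symbol L, which is not used elsewhere in the post.

```latex
\begin{aligned}
y &= x + F(x) \\
\frac{\partial \mathcal{L}}{\partial x}
  &= \frac{\partial \mathcal{L}}{\partial y}\,\frac{\partial y}{\partial x}
   = \frac{\partial \mathcal{L}}{\partial y}\left(I + \frac{\partial F}{\partial x}\right)
\end{aligned}
```

The identity term I gives the gradient a direct path back to x, so it does not have to pass only through the weights inside F.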

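🔧 Residual Block

A residual block can be written as:

$$y = F(x, \{W_i\}) + x$$

where:

- $F(x, \{W_i\})$: the residual branch, a module built from convolution, BatchNorm, ReLU, and similar layers.
- $x$: the shortcut (skip connection), added directly to the branch output.

When the dimensions do not match, a 1×1 convolution is usually applied on the shortcut so that they do.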
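The dimension mismatch can be sketched in PyTorch as follows. This is a minimal illustration, not the post's BasicBlock: the names `branch` and `projection` are made up here, and the sizes (64 to 128 channels, stride 2) are just one example of a stage transition.

```python
import torch
import torch.nn as nn

# Hypothetical residual branch: halves the spatial size and doubles the channels.
branch = nn.Sequential(
    nn.Conv2d(64, 128, kernel_size=3, stride=2, padding=1, bias=False),
    nn.BatchNorm2d(128),
    nn.ReLU(inplace=True),
    nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1, bias=False),
    nn.BatchNorm2d(128),
)

# Projection shortcut: a 1x1 convolution with the same stride and output width,
# so that the shortcut tensor matches the branch output.
projection = nn.Sequential(
    nn.Conv2d(64, 128, kernel_size=1, stride=2, bias=False),
    nn.BatchNorm2d(128),
)

x = torch.randn(1, 64, 56, 56)
fx = branch(x)               # shape [1, 128, 28, 28]
shortcut = projection(x)     # shape [1, 128, 28, 28]
y = torch.relu(fx + shortcut)
print(x.shape, fx.shape, y.shape)
```

Adding `x` directly to `fx` would fail here because both the channel count and the spatial size differ; the projection is what makes the element-wise sum possible.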

🔬 ResNet 网络结构

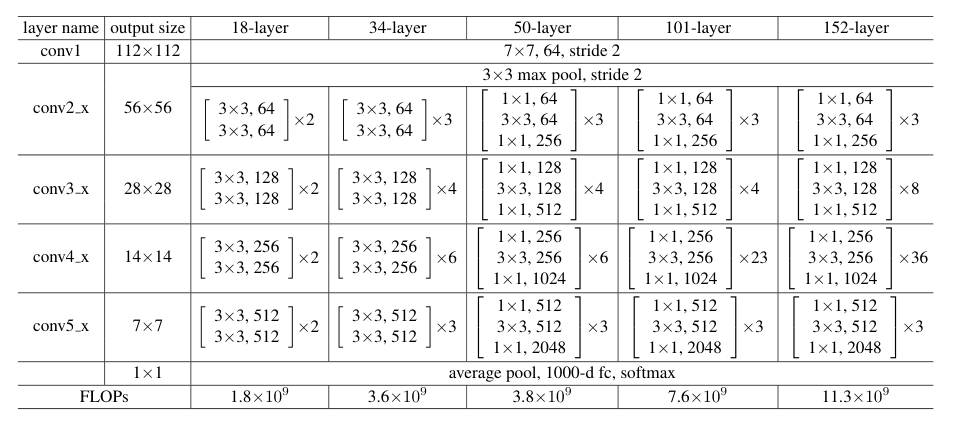

ResNet 设计了多种深度版本,如:

✅ ResNet-18

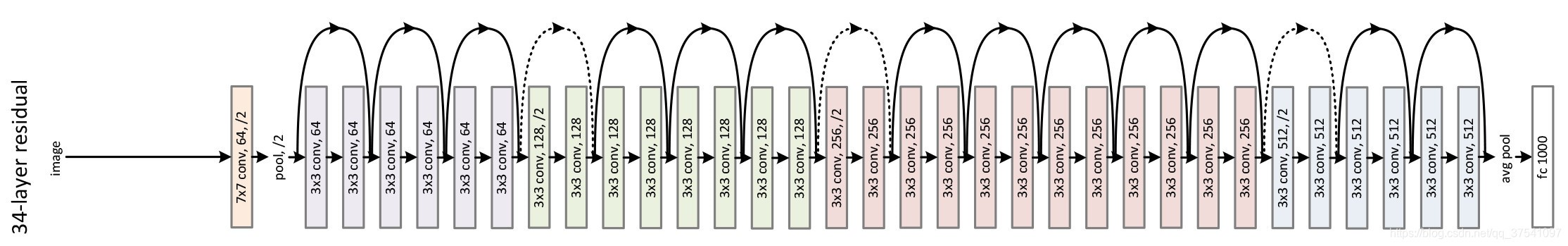

✅ ResNet-34

✅ ResNet-50

✅ ResNet-101

✅ ResNet-152

它们的核心都是将若干个残差块堆叠起来:

-

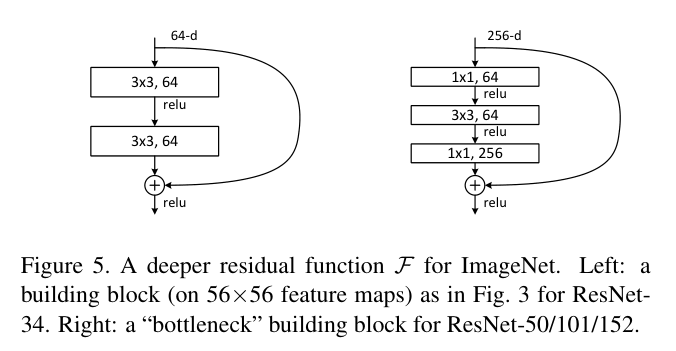

ResNet-18/34:使用 2 个 3×3 卷积的残差块(BasicBlock)。

-

ResNet-50 及以上:使用 3 个卷积的瓶颈结构(BottleneckBlock)。
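A rough way to see why the deeper variants switch to the bottleneck design is to count convolution weights. The sketch below is a back-of-the-envelope comparison under assumptions of my own: a block that enters and leaves at 256 channels, no bias terms, and BatchNorm parameters ignored. The helper `conv_weights` is hypothetical.

```python
def conv_weights(in_ch, out_ch, k):
    """Number of weights in a k x k convolution without bias."""
    return in_ch * out_ch * k * k

width = 256  # illustrative channel width entering and leaving the block

# Two 3x3 convolutions that keep the full width throughout.
two_3x3 = 2 * conv_weights(width, width, 3)

# Bottleneck: 1x1 reduce to width/4, 3x3 at the reduced width, 1x1 expand back.
mid = width // 4
bottleneck = (conv_weights(width, mid, 1)
              + conv_weights(mid, mid, 3)
              + conv_weights(mid, width, 1))

print(two_3x3, bottleneck)  # 1179648 69632
```

At this width the bottleneck uses roughly 17 times fewer weights per block, which is what makes stacking many more blocks affordable.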

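📈 Advantages of ResNet

✅ Addresses the degradation problem, which allows much deeper networks

✅ Deep networks train more easily and reach noticeably better accuracy

✅ Widely used as a backbone for downstream tasks (detection, segmentation, classification, and more)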

1. Model architecture:

2. Code:

Taking ResNet-18 as an example:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
```

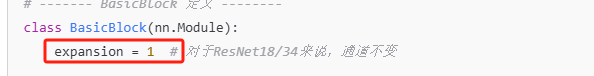

```python
# ------- BasicBlock definition --------
class BasicBlock(nn.Module):
    expansion = 1  # ResNet-18/34: the block's output channels equal out_channels

    def __init__(self, in_channels, out_channels, stride=1, downsample=None):
        super(BasicBlock, self).__init__()
        self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=3,
                               stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3,
                               stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channels)
        self.downsample = downsample  # set when channels or spatial size must change

    def forward(self, x):
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        # Project the shortcut so it matches the branch output
        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity  # element-wise addition with the shortcut
        out = self.relu(out)
        return out
```

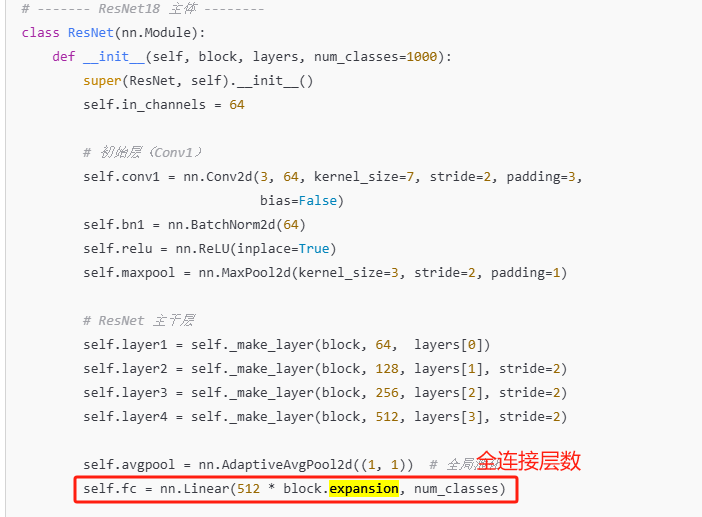

```python
# ------- ResNet backbone (ResNet-18 when used with BasicBlock) --------
class ResNet(nn.Module):
    def __init__(self, block, layers, num_classes=1000):
        super(ResNet, self).__init__()
        self.in_channels = 64

        # Stem (conv1)
        self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3,
                               bias=False)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

        # ResNet backbone stages
        self.layer1 = self._make_layer(block, 64, layers[0])
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2)
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2)
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2)
```

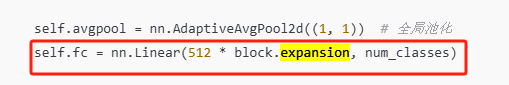

```python
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))  # global average pooling
        self.fc = nn.Linear(512 * block.expansion, num_classes)
```

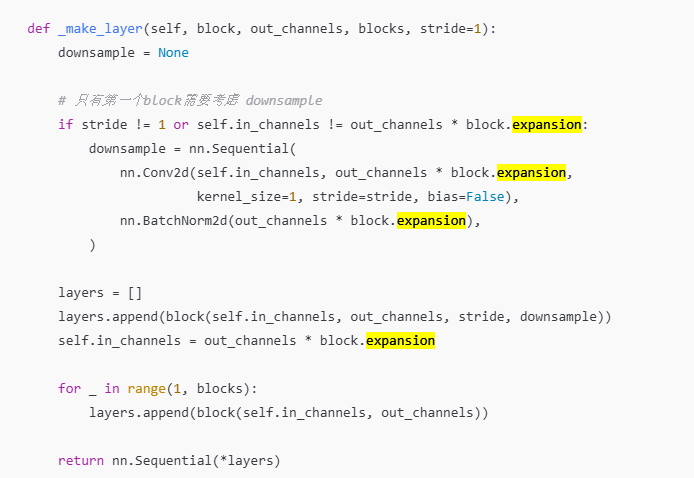

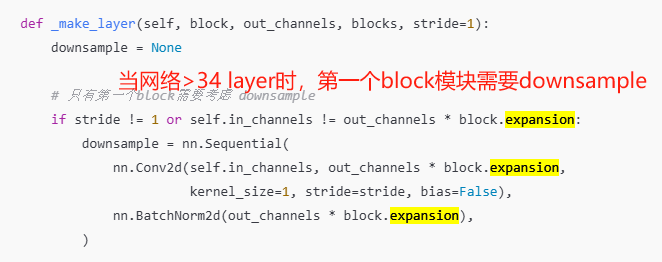

```python
    def _make_layer(self, block, out_channels, blocks, stride=1):
        downsample = None
        # Only the first block of a stage may need a downsample branch
        if stride != 1 or self.in_channels != out_channels * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.in_channels, out_channels * block.expansion,
                          kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(out_channels * block.expansion),
            )
```

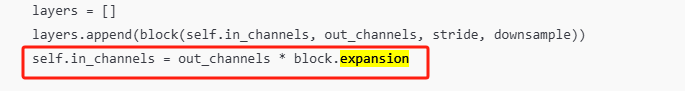

```python
        layers = []
        # First block of the stage: carries the stride and the optional downsample
        layers.append(block(self.in_channels, out_channels, stride, downsample))
        self.in_channels = out_channels * block.expansion
        # Remaining blocks: stride 1, no downsample
        for _ in range(1, blocks):
            layers.append(block(self.in_channels, out_channels))
        return nn.Sequential(*layers)

    def forward(self, x):
        # Shape comments assume ResNet-18 with a 224x224 input
        x = self.conv1(x)    # [B, 3, 224, 224] → [B, 64, 112, 112]
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)  # → [B, 64, 56, 56]

        x = self.layer1(x)   # → [B, 64, 56, 56]
        x = self.layer2(x)   # → [B, 128, 28, 28]
        x = self.layer3(x)   # → [B, 256, 14, 14]
        x = self.layer4(x)   # → [B, 512, 7, 7]

        x = self.avgpool(x)  # → [B, 512, 1, 1]
        x = torch.flatten(x, 1)
        x = self.fc(x)       # → [B, num_classes]
        return x
```
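The spatial sizes in the shape comments of forward can be checked with the usual output-size formula for convolutions and pooling, floor((n + 2·padding − kernel) / stride) + 1. The sketch below is plain Python; the helper `out_size` and the `stages` table are illustrative names, and each stage is represented only by the layer that carries its stride (the other 3×3, stride-1, padding-1 convolutions keep the size unchanged).

```python
def out_size(n, kernel, stride, padding):
    """Spatial output size of a convolution or max-pool (floor mode, dilation 1)."""
    return (n + 2 * padding - kernel) // stride + 1

# (name, kernel, stride, padding) of the layer that sets each stage's resolution
stages = [
    ("conv1",   7, 2, 3),
    ("maxpool", 3, 2, 1),
    ("layer1",  3, 1, 1),
    ("layer2",  3, 2, 1),
    ("layer3",  3, 2, 1),
    ("layer4",  3, 2, 1),
]

n = 224
sizes = {}
for name, k, s, p in stages:
    n = out_size(n, k, s, p)
    sizes[name] = n

print(sizes)
# {'conv1': 112, 'maxpool': 56, 'layer1': 56, 'layer2': 28, 'layer3': 14, 'layer4': 7}
```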

Building ResNet-18:

```python
def resnet18(num_classes=1000):
    return ResNet(BasicBlock, [2, 2, 2, 2], num_classes)
```

Test code:

```python
model = resnet18(num_classes=1000)
x = torch.randn(1, 3, 224, 224)
out = model(x)
print(out.shape)  # torch.Size([1, 1000])
```

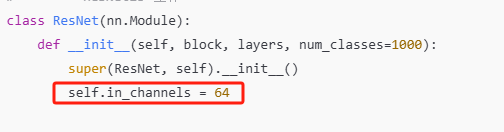

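3. Understanding the code:

in_channels: records the number of output channels of the previous block, which is the number of input channels for the next one.

It is defined inside the ResNet class as an instance attribute (self.in_channels), not a global variable.

Its value is updated in _make_layer right after the first block of each stage is built, so the remaining blocks and the next stage see the new channel count.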

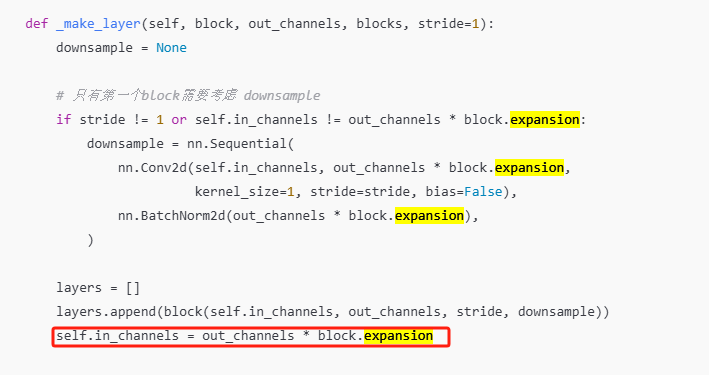
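The bookkeeping around in_channels can be replayed without building any layers. The sketch below mirrors the condition and the update found in _make_layer; the function `trace_make_layer` is a hypothetical helper written for this illustration, and the stage widths and strides are the ones passed in ResNet.__init__.

```python
def trace_make_layer(expansion, widths=(64, 128, 256, 512), strides=(1, 2, 2, 2)):
    """Replay the channel bookkeeping of _make_layer without building any layers."""
    in_channels = 64  # value set in ResNet.__init__ before layer1
    rows = []
    for out_channels, stride in zip(widths, strides):
        # Same condition as in _make_layer
        needs_downsample = stride != 1 or in_channels != out_channels * expansion
        rows.append((in_channels, out_channels * expansion, needs_downsample))
        in_channels = out_channels * expansion  # same update as in _make_layer
    return rows

basic = trace_make_layer(expansion=1)       # BasicBlock
bottleneck = trace_make_layer(expansion=4)  # Bottleneck

for name, rows in (("BasicBlock", basic), ("Bottleneck", bottleneck)):
    print(name)
    for i, (cin, cout, ds) in enumerate(rows, start=1):
        print(f"  layer{i}: in={cin:4d} out={cout:4d} downsample={ds}")
```

With expansion = 1, layer1 is the only stage without a downsample branch; with expansion = 4 every stage gets one, and the final stage ends at 2048 channels, which is the 512 * block.expansion fed to the fc layer.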

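Understanding expansion: the 18- and 34-layer networks use 1; the 50-, 101-, and 152-layer networks use 4.

Defined in the block module: as a class attribute (BasicBlock.expansion = 1, Bottleneck.expansion = 4).

Where expansion is used: in _make_layer (the downsample condition, the downsample convolution and its BatchNorm, and the in_channels update) and in the fc layer of ResNet.__init__.

Purpose:

1. Updating the input channel count for the next layer.

2. Sizing the input of the fully connected layer.

3. For networks with 50 or more layers:

a. Deciding whether to downsample; the check is made when the first block of each stage is built.

b. Computing the number of output channels = 4 x out_channels (channels, not resolution).

c. Computing the number of BatchNorm channels.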

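Walking through the logic for a network with 50 or more layers:

For the first block, the stride is unchanged, but with expansion = 4 the downsample condition is met (64 != 64 * 4), so the if branch is taken: a 1×1 convolution maps the 64 input channels to 64 * 4 = 256, and the result is added element-wise to the branch output at the end of the block (an addition, not a concat).

After self.layer1 = self._make_layer(block, 64, layers[0]), BasicBlock would go on to apply conv2d(64, 64, 3, 1), and at this point the logic breaks down: the 1×1, 64-channel convolution is missing, and the branch would output 64 channels while the shortcut outputs 256. I then asked an AI and found out that the block module for networks with 50 or more layers uses different code. Argh...

```python
layers = []
layers.append(block(self.in_channels, out_channels, stride, downsample))
self.in_channels = out_channels * block.expansion
```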

Block module for networks with 50 or more layers:

```python
class Bottleneck(nn.Module):
    expansion = 4  # the last convolution outputs 4x out_channels

    def __init__(self, in_channels, out_channels, stride=1, downsample=None):
        super(Bottleneck, self).__init__()
        # 1x1 convolution: reduce the channel count
        self.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channels)
        # 3x3 convolution: carries the stride
        self.conv2 = nn.Conv2d(out_channels, out_channels, kernel_size=3,
                               stride=stride, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channels)
        # 1x1 convolution: expand the channel count by `expansion`
        self.conv3 = nn.Conv2d(out_channels, out_channels * self.expansion,
                               kernel_size=1, bias=False)
        self.bn3 = nn.BatchNorm2d(out_channels * self.expansion)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample

    def forward(self, x):
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)

        # Project the shortcut so it matches the branch output
        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity  # element-wise addition with the shortcut
        out = self.relu(out)
        return out
```
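The channel flow through a bottleneck can be checked with three bare convolutions. This is a shape-only sketch, assuming a non-first block of layer1 (256 channels in, width 64, stride 1); BatchNorm and ReLU are left out because they do not change shapes, and the variable names are made up for the illustration.

```python
import torch
import torch.nn as nn

conv_reduce = nn.Conv2d(256, 64, kernel_size=1, bias=False)             # 1x1: 256 -> 64
conv_spatial = nn.Conv2d(64, 64, kernel_size=3, padding=1, bias=False)  # 3x3: 64 -> 64
conv_expand = nn.Conv2d(64, 256, kernel_size=1, bias=False)             # 1x1: 64 -> 256

x = torch.randn(1, 256, 56, 56)
shapes = []
out = x
for conv in (conv_reduce, conv_spatial, conv_expand):
    out = conv(out)
    shapes.append(tuple(out.shape))

print(shapes)  # [(1, 64, 56, 56), (1, 64, 56, 56), (1, 256, 56, 56)]

# The branch output matches the input again, so the shortcut can be added as-is.
y = out + x
```

Only the first block of a stage sees a different input shape, which is why that block alone receives the downsample branch.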

Constructor function:

```python
def resnet50(num_classes=1000):
    return ResNet(Bottleneck, [3, 4, 6, 3], num_classes)
```

Usage example:

```python
model = resnet50(num_classes=1000)
x = torch.randn(1, 3, 224, 224)
out = model(x)
print(out.shape)  # torch.Size([1, 1000])
```

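Understanding BasicBlock: used only by the 18- and 34-layer networks.

The block module represents one repeated unit: conv -> bn -> relu -> conv -> bn -> +res -> relu

The downsample used by the residual shortcut inside the block is built in _make_layer:

It is created when stride != 1 (that is, stride=2 was passed) or when self.in_channels != out_channels * block.expansion (the input channels differ from the block's output channels).

Understanding Bottleneck: used by the networks with 50 or more layers.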

| Network | Block type | Block configuration [layer1, layer2, layer3, layer4] |
|---|---|---|
| ResNet50 | Bottleneck | [3, 4, 6, 3] |
| ResNet101 | Bottleneck | [3, 4, 23, 3] |
| ResNet152 | Bottleneck | [3, 8, 36, 3] |
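The block configurations also explain the numbers in the network names. The sketch below counts conv1, the convolutions on the main path of every block, and the fc layer; the 1×1 downsample convolutions are not counted. The ResNet34 configuration [3, 4, 6, 3] comes from the original ResNet design rather than from this post, and `weighted_layers` is a hypothetical helper.

```python
configs = {
    "ResNet18":  ("BasicBlock", [2, 2, 2, 2]),
    "ResNet34":  ("BasicBlock", [3, 4, 6, 3]),   # not listed in this post
    "ResNet50":  ("Bottleneck", [3, 4, 6, 3]),
    "ResNet101": ("Bottleneck", [3, 4, 23, 3]),
    "ResNet152": ("Bottleneck", [3, 8, 36, 3]),
}
convs_per_block = {"BasicBlock": 2, "Bottleneck": 3}

def weighted_layers(block_type, layers):
    """conv1 + main-path convolutions of every block + the fc layer."""
    return 1 + convs_per_block[block_type] * sum(layers) + 1

depths = {name: weighted_layers(*cfg) for name, cfg in configs.items()}
print(depths)
# {'ResNet18': 18, 'ResNet34': 34, 'ResNet50': 50, 'ResNet101': 101, 'ResNet152': 152}
```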

✅ How to call them

```python
def resnet101(num_classes=1000):
    return ResNet(Bottleneck, [3, 4, 23, 3], num_classes)

def resnet152(num_classes=1000):
    return ResNet(Bottleneck, [3, 8, 36, 3], num_classes)
```

Example test:

```python
model = resnet101()
x = torch.randn(1, 3, 224, 224)
y = model(x)
print(y.shape)  # torch.Size([1, 1000])
```
