Blender Python: generating a video from a virtual character


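1. Download a virtual character (FBX): Mixamo

2. Design a virtual character: download MakeHuman, export the model as OBJ or FBX, then rig it on Mixamo

3. Rigging (requires a GPU machine with a graphical interface): RigNet can rig the skeleton automatically: https://github.com/zhan-xu/RigNet

4. Alternatively, upload the model to Mixamo and rig it there

5. Code (camera tracking, action blending, and mixing the sound track with the action track):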

import os
import re

import bpy


class ModelProcessingTool:
    def __init__(self):
        # self.start()
        print("finished!")
        self.base_data_path = "G:/git_alg/dengta/digital_man/data/"
        self.scn = bpy.context.scene
        self.head_bone_reg = re.compile(r'.*Spine$')

    def add_action_to_nla(self, ob, track_name, action_name, frame_num):
        # NLA tracks are grouped per object. To drive a skeleton, pass the armature
        # object and insert the strip into one of its tracks.
        # The action was authored beforehand (e.g. in the Action Editor) and waits in
        # bpy.data.actions until it is placed on a track.
        action = bpy.data.actions.get(action_name)  # key statement
        if action is None:
            print("error -2")
            return False
        # Make sure the object has animation data before touching its NLA tracks.
        if ob.animation_data is None:
            ob.animation_data_create()
        nla_tracks = ob.animation_data.nla_tracks
        print(nla_tracks.keys())
        # Reuse the track if one with this name already exists, otherwise create it.
        if track_name in nla_tracks.keys():
            track1 = nla_tracks[track_name]
        else:
            track1 = nla_tracks.new()  # key statement
            track1.name = track_name
        # Conflict check: strips on one track may not overlap. Blending actions would
        # need a second track; that is not needed here, so an overlap is an error.
        action_len = action.frame_range[1] - action.frame_range[0]
        action_start = frame_num
        action_end = int(action_start + action_len)
        for strip in track1.strips:
            print(action_start, action_end, strip.frame_start, strip.frame_end)
            # Half-open frame ranges overlap when each starts before the other ends.
            if max(action_start, int(strip.frame_start)) < min(action_end, int(strip.frame_end)):
                print("error -1")
                return False
        # Insert the action into the track at the requested start frame.
        track1.strips.new(action.name, frame_num, action)  # key statement
        return True

    def compute_each_action_start_frame_num(self, action_list, real_action_name_map, sound_object_list,
                                            action_sep_frame_num: int = 2):
        # Lay the actions out back to back. Each slot lasts as long as the longer of
        # the action and its sound clip, plus a gap of action_sep_frame_num frames.
        frame_start_num_list = []
        frame_start_num, frame_end_num = 0, 0
        for i, each in enumerate(action_list):
            real_action_name = real_action_name_map[each]
            action_obj = bpy.data.actions[real_action_name]
            action_frame_len = int(action_obj.frame_range[1] - action_obj.frame_range[0])
            frame_start_num_list.append(frame_end_num)
            if sound_object_list[i]:
                action_frame_len = max(action_frame_len, sound_object_list[i].frame_final_duration)
            frame_end_num = frame_end_num + action_frame_len + action_sep_frame_num
        return action_list, sound_object_list, frame_start_num_list, frame_start_num, frame_end_num

    def move_sound_frame(self, sound_object_list, frame_start_num_list):
        # Shift every sound strip to the start frame of its slot.
        for i, each in enumerate(sound_object_list):
            if each:
                each.frame_start = frame_start_num_list[i]
                print("sound move", each.name, each.frame_final_start, each.frame_final_end, each.frame_start)

    def move_nla_action_frame(self, nla_init_action_target, action_list, frame_start_num_list, real_action_name_map,
                              track_name="track1"):
        # track_name: the NLA track that receives the strips
        for i, action in enumerate(action_list):
            self.add_action_to_nla(nla_init_action_target, track_name, real_action_name_map[action],
                                   frame_start_num_list[i])

    def camera_set(self, target):
        camera = bpy.data.objects.new('Camera', bpy.data.cameras.new('Camera'))
        bpy.ops.curve.primitive_bezier_circle_add(radius=6, enter_editmode=False, align='WORLD',
                                                  location=(0, 0, 0.7), scale=(1, 1, 1))
        rot_centre = bpy.context.active_object
        rot_centre.name = "camera_orbit"
        # Link the camera to the scene so that it can be rendered.
        self.scn.collection.objects.link(camera)
        camera.parent = rot_centre
        # Keep the camera pointed at the character.
        m = camera.constraints.new('TRACK_TO')
        m.target = target
        # Aim at the first bone whose name ends in "Spine"; this assumes the
        # armature data-block is named "Armature".
        head_bone = None
        for bone in bpy.data.armatures["Armature"].bones.keys():
            if self.head_bone_reg.fullmatch(bone):
                head_bone = bone
                break
        if head_bone:
            m.subtarget = head_bone
        # Configure the new camera directly instead of looking it up by name,
        # because Blender may rename it (e.g. "Camera.001") on a name clash.
        camera.data.lens = 45.14
        camera.data.lens_unit = "MILLIMETERS"
        # Required: without an active scene camera nothing can be rendered.
        self.scn.camera = camera

    def process_movie(self, action_list=None, sound_list=None):
        # Fall back to a demo sequence when no lists are passed in.
        if action_list is None:
            action_list = ["Waving", "thanks", "Waving", "thanks", "thanks"]
        if sound_list is None:
            sound_list = ["visit", "thanks", "Waving", "thanks", "thanks"]
        # Delete every object in the scene.
        self.delete_all()
        # Each action file only needs to be loaded once (order is preserved).
        unique_actions = list(dict.fromkeys(action_list))
        model_name = "girl1"
        self.load_module(model_name)
        # TODO: load the model and the actions separately
        # ********************* data loading *********************
        print("load all actions and sound")
        real_action_name_map = self.load_all_action(unique_actions)
        sound_object_list = self.load_all_sound(sound_list)
        # ********************* scene setup *********************
        print("hide render message")
        self.batch_hide_renders(unique_actions)
        print("close self default animation")
        self.delete_all_default_animation(unique_actions)
        print("prepare camera")
        target = bpy.data.objects[model_name]
        self.camera_set(target)
        print("prepare light")
        bpy.ops.object.light_add(type='SUN', radius=30, align='WORLD', location=(0, -3, 10), scale=(20, 20, 20))
        # ********************* action arrangement: begin *********************
        # Work out where each action and sound clip goes on the timeline.
        print("compute frame position")
        (action_list, sound_object_list, frame_start_num_list,
         frame_start_num, frame_end_num) = self.compute_each_action_start_frame_num(
            action_list, real_action_name_map, sound_object_list)
        print(frame_start_num_list)
        print("format sound frame position")
        self.move_sound_frame(sound_object_list, frame_start_num_list)
        self.move_nla_action_frame(target, action_list, frame_start_num_list, real_action_name_map)
        # ********************* action arrangement: end *********************
        # TODO: add transition animations
        # ********************* export settings *********************
        # Video export
        self.scn.frame_start = frame_start_num
        self.scn.frame_end = frame_end_num
        self.scn.render.image_settings.file_format = 'FFMPEG'  # encode a video file via FFmpeg
        self.scn.render.ffmpeg.format = 'AVI'
        self.scn.render.ffmpeg.gopsize = 18
        self.scn.render.ffmpeg.audio_codec = "MP3"
        self.scn.render.ffmpeg.audio_channels = "STEREO"
        self.scn.render.ffmpeg.audio_mixrate = 48000
        self.scn.render.ffmpeg.audio_bitrate = 192
        self.scn.render.ffmpeg.audio_volume = 1
        self.scn.render.filepath = os.path.join(self.base_data_path, "output", "test4.avi")
        bpy.ops.render.render(animation=True)  # render the whole frame range

    def delete_all_default_animation(self, object_name_list):
        for each in object_name_list:
            self.delete_default_animation(each)

    def delete_default_animation(self, object_name):
        # Detach the action that the importer assigned to the object, if any.
        obj = bpy.data.objects.get(object_name)
        if obj is not None and obj.animation_data is not None:
            obj.animation_data.action = None

    def batch_hide_renders(self, object_name_list):
        for name in object_name_list:
            self.hide_render(name)

    def hide_render(self, object_name):
        # Hide the object and its direct children in both the render and the viewport.
        obj = bpy.data.objects[object_name]
        for each in (obj, *obj.children):
            each.hide_render = True
            each.hide_viewport = True

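    def delete_object(self, name):
        # Remove a single object by name; do nothing if it does not exist.
        obj = bpy.data.objects.get(name)
        if obj is not None:
            bpy.data.objects.remove(obj, do_unlink=True)

    def delete_all(self):
        bpy.ops.object.select_all(action='SELECT')
        bpy.ops.object.delete(use_global=True)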

    def load_all_sound(self, sound_list):
        # One sequencer channel per clip; an empty name yields None (no sound for that slot).
        sound_object_list = []
        for i, sound_name in enumerate(sound_list):
            if not sound_name:
                sound_object_list.append(None)
            else:
                sound_object_list.append(self.load_sound(sound_name, i + 1))
        return sound_object_list

    def load_sound(self, sound_name: str, channel: int, start_frame=0):
        if not self.scn.sequence_editor:
            self.scn.sequence_editor_create()
        sound_path = os.path.join(self.base_data_path, "sound", "{0}.mp3".format(sound_name))
        return self.scn.sequence_editor.sequences.new_sound(sound_name, sound_path, channel, start_frame)

    def load_module(self, module_name):
        path = os.path.join(self.base_data_path, "module")
        self.load(path, module_name, file_type='fbx')
        try:
            self.delete_default_animation(module_name)
        except Exception as e:
            print(e)

    def load_all_action(self, action_set):
        path = os.path.join(self.base_data_path, "action")
        # Maps each requested action name to the name Blender gave the imported action.
        real_action_name_map = {}
        current_set = set(bpy.data.actions.keys())
        for action in action_set:
            self.load(path, action, file_type='fbx')
            # Whatever is new in bpy.data.actions came from this file.
            result = set(bpy.data.actions.keys()).difference(current_set)
            if result:
                real_action_name_map[action] = result.pop()
            current_set = set(bpy.data.actions.keys())
        return real_action_name_map

    def load(self, path, action, file_type):
        full_path = os.path.join(path, "{0}.{1}".format(action, file_type))
        if file_type == "fbx":
            bpy.ops.import_scene.fbx(filepath=full_path, directory=path, filter_glob="*." + file_type,
                                     axis_up='Z', axis_forward='-Y')
        elif file_type in ("gltf", "glb"):
            # The glTF importer does not take axis_up / axis_forward options.
            bpy.ops.import_scene.gltf(filepath=full_path, filter_glob='*.glb;*.gltf')
        # Rename the imported object so that it can be looked up by name later.
        try:
            bpy.context.active_object.name = action
        except Exception as e:
            print(e)

    def batch_test(self):  # load a batch of avatar models
        path = "E:\\myFile\\CASA2022\\code\\updateAvatarGeometry"  # "E:\\myModel3D\\in"
        for myid in "abcdefghijklmn":
            bpy.ops.import_scene.gltf(filepath=os.path.join(path, myid + ".gltf"), filter_glob='*.glb;*.gltf')
            # delete_parent() is not defined in this listing; it is assumed here to
            # mean "unparent while keeping the world transform".
            for obj in bpy.data.objects:
                world_matrix = obj.matrix_world.copy()
                obj.parent = None
                obj.matrix_world = world_matrix
            bpy.ops.object.select_all(action='DESELECT')  # clear the selection
            # Delete everything that is neither a mesh nor a light.
            for obj in bpy.context.selectable_objects:
                if obj.type not in ('MESH', 'LIGHT'):
                    obj.select_set(True)
            bpy.ops.object.delete()
            # Rename the freshly imported mesh; default imported names are generally long.
            for obj in bpy.context.selectable_objects:
                if obj.type == 'MESH' and len(obj.name) > 5:
                    obj.name = myid
        for obj in bpy.context.selectable_objects:
            if obj.type == 'LIGHT':
                obj.name = "test"
                # Debug aid: list the attributes available on the light object.
                print(".".join(dir(obj)))

ModelProcessingTool().process_movie(None, None)
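The frame layout computed by `compute_each_action_start_frame_num` can be checked outside Blender. The sketch below is a minimal pure-Python version of the same arithmetic. The function name `schedule_start_frames` and the sample frame counts are hypothetical, chosen only for illustration; they are not part of the listing above.

```python
from typing import List, Optional, Tuple


def schedule_start_frames(
    action_lengths: List[int],
    sound_lengths: List[Optional[int]],
    gap: int = 2,
) -> Tuple[List[int], int]:
    """Return the start frame of every slot and the final end frame.

    Each slot lasts as long as the longer of the action and its sound clip
    (None means "no sound"), followed by `gap` empty frames.
    """
    starts = []
    cursor = 0
    for action_len, sound_len in zip(action_lengths, sound_lengths):
        starts.append(cursor)
        slot_len = max(action_len, sound_len) if sound_len else action_len
        cursor += slot_len + gap
    return starts, cursor


# Hypothetical frame counts: three actions, the second has a longer sound clip.
starts, end_frame = schedule_start_frames([30, 20, 45], [None, 50, 10])
print(starts, end_frame)  # [0, 32, 84] 131
```

As in the method above, the returned end frame includes the gap after the last slot, so the rendered video ends with a couple of idle frames.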

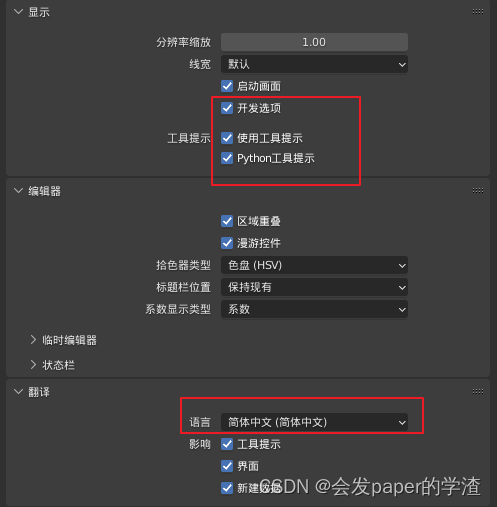
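`add_action_to_nla` refuses to place a strip that would overlap an existing one on the same track. That test is ordinary interval arithmetic and can be sketched without Blender. The helper names `ranges_overlap` and `can_place` are hypothetical, and treating frame ranges as half-open `[start, end)` is an assumption of this sketch.

```python
from typing import Iterable, Tuple

FrameRange = Tuple[int, int]  # half-open: start is included, end is not


def ranges_overlap(a: FrameRange, b: FrameRange) -> bool:
    """Two half-open ranges overlap when each starts before the other ends."""
    return max(a[0], b[0]) < min(a[1], b[1])


def can_place(new_strip: FrameRange, existing: Iterable[FrameRange]) -> bool:
    """Return True if new_strip collides with none of the existing strips."""
    return not any(ranges_overlap(new_strip, strip) for strip in existing)


# Hypothetical strips already on a track.
track = [(0, 30), (32, 82)]
print(can_place((84, 129), track))  # True: starts after both strips end
print(can_place((25, 40), track))   # False: collides with both strips
print(can_place((30, 32), track))   # True: fits exactly into the gap
```

Comparing endpoints avoids building a set of every frame number, which matters little for short clips but keeps the check constant-time per strip.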

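6. Note: if you are not sure which code corresponds to an operation, Blender's interface settings can show the Python code behind each operation.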
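One way to do this is Blender's Python tooltips: with the option enabled, hovering over a button or menu entry shows the operator or property behind it. The sketch below switches the option on from a script. It assumes it is run inside Blender, and `enable_python_tooltips` is a hypothetical helper that simply returns False when `bpy` cannot be imported.

```python
def enable_python_tooltips() -> bool:
    """Turn on Python tooltips in Blender's preferences.

    Returns False when bpy cannot be imported (not running inside Blender).
    """
    try:
        import bpy  # normally only available in Blender's bundled Python
    except ImportError:
        return False
    view = bpy.context.preferences.view
    view.show_tooltips = True         # tooltips have to be enabled at all
    view.show_tooltips_python = True  # add the Python path to each tooltip
    return True


if __name__ == "__main__":
    print("Python tooltips enabled:", enable_python_tooltips())
```

The same switch is available in the interface under Preferences > Interface > Display > Python Tooltips.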
