Spark Streaming-附有代码

一. 官方参考链接Spark Streaming - Spark 3.2.1 Documentationhttp://spark.apache.org/docs/latest/streaming-programming-guide.html二. Spark Streaming 核心抽象 DStream简介is an extension of the core Spark APItenables s

一. 官方参考链接

二. Spark Streaming 核心抽象 DStream

-

简介

is an extension of the core Spark API

tenables scalable, high-throughput, fault-tolerant

stream processing of live data streams.

-

流

输入: Kafka, Flume, Kinesis, or TCP sockets

//To do 业务逻辑处理

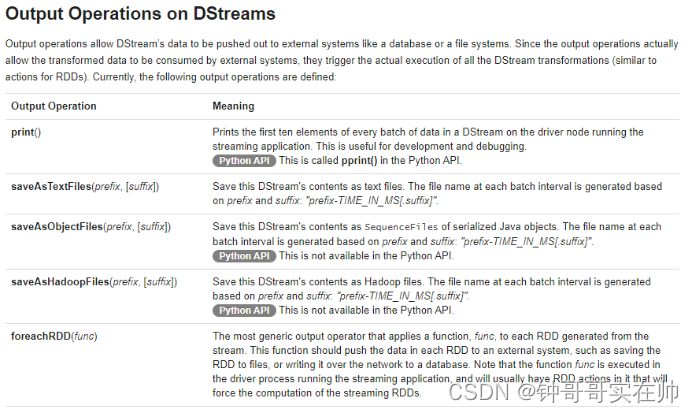

输出: filesystems, databases, and live dashboards

常用流处理操作框架

Storm: 真正的实时流处理 Tuple

Spark Streaming: 并不是真正实时流处理,而是mini batch操作(将数据流按时间间隔拆分成很小的批次)

使用spark一栈式解决问题,批处理出发

receives live input data streams

divides the data into batches

batches are then processed by the Spark engine to generate

the final stream of results in batches

Flink: 流处理出发

Kafka Stream:

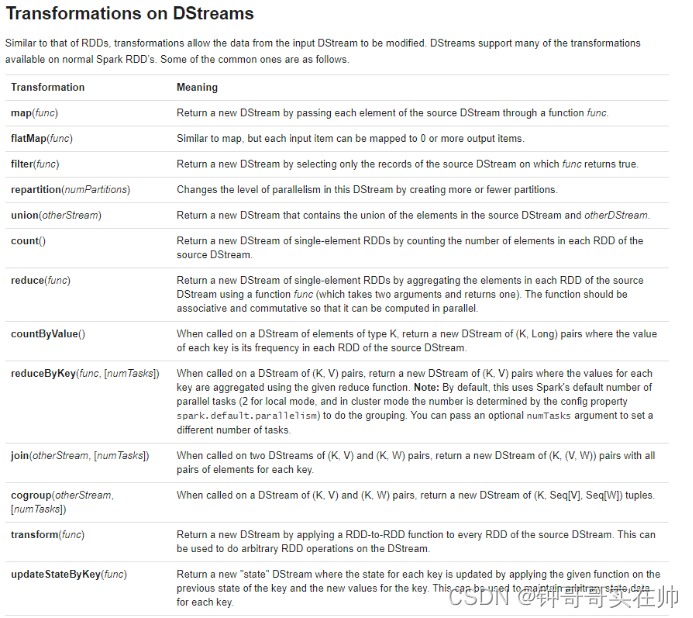

DStreams can be created either from input data streams from sources such as Kafka, Flume, and Kinesis, or by applying high-level operations on other DStreams.

Internally, a DStream is represented as a sequence of RDDs.

三. 范例

from pyspark import SparkContext, SparkConf from pyspark.streaming import StreamingContext,kafka if __name__ == '__main__': # nc -lk 9999 做测试 conf = SparkConf().setAppName('spark_streaming').setMaster('local[2]') sc = SparkContext(conf=conf) scc = StreamingContext(sc, 10) lines = scc.socketTextStream('localhost', 9999) words = lines.flatMap(lambda line: line.split(" ")).map(lambda word: (word, 1)).reduceByKey(lambda x, y: x+y) words.pprint() scc.start() scc.awaitTermination() # sc.stop()

GitCode 天启AI是一款由 GitCode 团队打造的智能助手,基于先进的LLM(大语言模型)与多智能体 Agent 技术构建,致力于为用户提供高效、智能、多模态的创作与开发支持。它不仅支持自然语言对话,还具备处理文件、生成 PPT、撰写分析报告、开发 Web 应用等多项能力,真正做到“一句话,让 Al帮你完成复杂任务”。

更多推荐

已为社区贡献1条内容

已为社区贡献1条内容

http://spark.apache.org/docs/latest/streaming-programming-guide.html

http://spark.apache.org/docs/latest/streaming-programming-guide.html

所有评论(0)